CAIDA/TrustML Workshop – ICML Visitors @ UBC, July 2025

July 15, 2025, 9:30 am to 5:00 pm

Location: KAIS 2020/2030

For more information, please visit: https://caida.ubc.ca/event/caidatrustml-2025-icml-visits

Accepted Posters

- Jingjing Zheng, The University of British Columbia: "Handling The Non-Smooth Challenge in Tensor SVD: A Multi-Objective Tensor Recovery Framework"

- Abraham Chan, The University of British Columbia: "Hierarchical Unlearning Framework for Multi-Class Classification"

- Tony Mason, The University of British Columbia: "Beyond Constraint: Emergent AI Alignment Through Narrative Coherence in the Mallku Protocol"

- Yash Mali, The University of British Columbia: "Natural language interface for medical guidelines"

- Gargi Mitra, The University of British Columbia: "Learning from the Good Ones: Risk Profiling-Based Defenses Against Evasion Attacks on DNNs"

- Harshinee Sriram, The University of British Columbia: "Multimodal Classification of Alzheimer’s Disease by Combining Facial and Eye-Tracking Data"

- Sky Kehan Sheng, The University of British Columbia: "The erasure of intensive livestock farming in text-to-image generative AI"

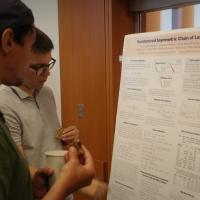

- Grigory Malinovsky, King Abdullah University of Science and Technology: "Randomized Asymmetric Chain of LoRA"

- Nicholas Richardson, The University of British Columbia: "Unsupervised Signal Demixing with Tensor Factorization"

- Michael Tegegn, The University of British Columbia: "The Illusion of Success: Value of Learning-Based Android Malware Detectors"

- Yingying Wang, The University of British Columbia: "Student Experience of Using Generative AI in a Software Engineering Course — A Mixed-Methods Analysis"

- Sarah Dagger, The University of British Columbia: "Explain Before You Patch: Generating Reliable Bug Explanations with LLMs and Program Analysis"

- Masih Beigi Rizi, The University of British Columbia: "Quantifying Prompt Ambiguity in Large Language Models"

- Mohamad Chehade, University of Texas at Austin: "LEVIS: Large Exact Verifiable Input Spaces for Neural Networks"